What is the NVIDIA HGX Platform?

How did NVIDIA HGX revolutionize AI?

NVIDIA HGX revolutionized AI by providing a scalable and flexible architecture designed to accelerate deep learning and high-performance computing (HPC) workflows. By integrating powerful GPUs and high-speed interconnect technology, the HGX platform offers unprecedented computational power and data throughput. This enables researchers and developers to train larger models, perform more complex simulations, and analyze vast datasets with increased efficiency and speed. Additionally, NVIDIA HGX supports a wide range of AI frameworks and libraries, facilitating streamlined integration and deployment across various applications.

View FiberMall for More Details

What makes NVIDIA HGX purpose-built for the convergence of simulation, deep learning, and data analytics?

- Unified Architecture: NVIDIA HGX combines GPU-accelerated computing with high-speed networking, creating a cohesive platform that supports diverse workloads including simulation, deep learning, and data analytics.

- Scalability: The platform is designed to scale from single-node systems to multi-node clusters, making it suitable for both small-scale experiments and large-scale deployments.

- Optimized Software Stack: NVIDIA HGX integrates with NVIDIA’s CUDA, cuDNN, and TensorRT libraries, as well as popular AI frameworks such as TensorFlow and PyTorch, ensuring maximum compatibility and performance.

- High-Speed Interconnects: NVLink (with NVSwitch) moves data quickly between GPUs within a node, while InfiniBand connects nodes to one another. Together they reduce communication bottlenecks and improve overall system performance.

- Advanced Cooling Solutions: Engineered for optimal thermal management, NVIDIA HGX systems maintain peak performance even under heavy computational loads.


Why choose NVIDIA HGX for an unmatched end-to-end accelerated computing platform?

- Superior Performance: NVIDIA HGX delivers exceptional processing power, shortening training times and making it practical to train larger, more capable AI models.

- Versatility: The platform supports a wide range of applications, from traditional HPC tasks to cutting-edge AI research and development.

- Reliability: Built with enterprise-grade components, NVIDIA HGX ensures high availability and minimal downtime.

- Comprehensive Support: NVIDIA provides extensive documentation, development tools, and customer support, helping organizations maximize their investment in HGX technology.

- Future-Proof: With continual updates and support for the latest advancements in GPU technology, NVIDIA HGX is designed to meet the evolving needs of AI and HPC workloads.

How does NVIDIA HGX H100 compare to HGX A100?

What are the key features of NVIDIA HGX H100?

- Next-Gen GPUs: Equipped with the latest NVIDIA H100 Tensor Core GPUs, offering unprecedented performance for diverse workloads.

- Enhanced Scalability: Supports up to 8 GPUs per node and seamless multi-node scaling capabilities to handle even the most demanding computational tasks.

- Improved Precision: Adds FP8 support through the Transformer Engine, alongside FP16, BF16, and TF32, so mixed-precision computation can balance performance and accuracy for AI and HPC applications.

- Energy Efficiency: Designed with power efficiency in mind, providing optimal performance-per-watt metrics.

- Flexible Topology: Configurable interconnect topology permits flexible deployment options to meet varied performance and connectivity requirements.

How do A100 GPUs enhance computing performance?

- Third-Generation Tensor Cores: Improve AI training and inference throughput; NVIDIA cites up to 20x higher performance than the previous (Volta) generation for some workloads.

- Multi-Instance GPU (MIG) Technology: Partitions a single A100 into as many as seven isolated GPU instances, so multiple workloads or users can share one GPU, maximizing utilization and efficiency.

- HBM2 Memory: High-bandwidth memory (HBM2 on the 40GB A100, HBM2e on the 80GB A100) provides the bandwidth needed for fast data access and processing.

- Structural Sparsity: Exploits 2:4 fine-grained structured sparsity in network weights, which can double Tensor Core math throughput for pruned models, typically with little or no loss in accuracy.

- Dynamic GPU Resource Allocation: Adaptively utilizes GPU resources to optimize performance for diverse workloads, ranging from traditional compute to AI tasks.
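To make the 2:4 sparsity pattern concrete: in every contiguous group of four weights, at most two may be non-zero. The plain-Python sketch below prunes a weight list to that pattern by keeping the two largest-magnitude values in each group. It is an illustration under simplifying assumptions (a flat list, magnitude-based selection, no fine-tuning afterwards), not NVIDIA's pruning tooling; the names `prune_2_4` and `is_2_4_sparse` are hypothetical.

```python
from typing import List


def prune_2_4(weights: List[float]) -> List[float]:
    """Zero the two smallest-magnitude values in every group of four weights.

    Simplified illustration of 2:4 structured sparsity (hypothetical helper).
    """
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = list(weights)
    for start in range(0, len(weights), 4):
        group = range(start, start + 4)
        # Keep the indices of the two largest magnitudes in this group.
        keep = sorted(group, key=lambda i: abs(weights[i]), reverse=True)[:2]
        for i in group:
            if i not in keep:
                pruned[i] = 0.0
    return pruned


def is_2_4_sparse(weights: List[float]) -> bool:
    """Check that each group of four contains at most two non-zero values."""
    return all(
        sum(1 for w in weights[s:s + 4] if w != 0.0) <= 2
        for s in range(0, len(weights), 4)
    )


if __name__ == "__main__":
    w = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
    print(prune_2_4(w))  # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
```

In practice, a model pruned this way is usually fine-tuned afterwards so it can recover accuracy before it runs on sparse Tensor Cores.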

Can NVIDIA HGX H100 accelerate deep learning performance?

- Advanced Tensor Cores: Supports diverse data types and precisions, enhancing training speed and inference accuracy for deep learning models.

- Expanded Framework Support: Compatible with leading deep learning frameworks, such as TensorFlow, PyTorch, and MXNet, simplifying deployment and scaling.

- Data and Model Parallelism: Supports efficient, large-scale data parallelism and model parallelism, reducing training times.

- Unified Memory Architecture: Integrates with NVIDIA’s memory hierarchy to support seamless data transfer and reduced latency.

- Accelerated Libraries: Takes advantage of optimized software libraries like cuDNN and TensorRT to maximize deep learning performance.
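Data parallelism gives each GPU a slice of the batch, computes gradients locally, and averages them (an all-reduce) before every weight update. The pure-Python sketch below shows that idea for a tiny linear model with mean-squared-error loss; the "workers" are simulated in a loop, and the helper names are hypothetical. With equal-sized shards, the averaged gradient equals the full-batch gradient.

```python
from typing import List, Sequence

Vector = List[float]


def mse_gradient(w: Sequence[float], xs: Sequence[Sequence[float]],
                 ys: Sequence[float]) -> Vector:
    """Gradient of mean squared error for a linear model y_hat = w . x."""
    n = len(xs)
    grad = [0.0] * len(w)
    for x, y in zip(xs, ys):
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for j, xj in enumerate(x):
            grad[j] += 2.0 * err * xj / n
    return grad


def data_parallel_gradient(w: Sequence[float], xs: Sequence[Sequence[float]],
                           ys: Sequence[float], num_workers: int) -> Vector:
    """Split the batch across simulated workers and average their gradients.

    The averaging step stands in for the all-reduce that real systems run
    over NVLink/NVSwitch inside a node and InfiniBand between nodes.
    """
    if len(xs) % num_workers != 0:
        raise ValueError("batch size must be divisible by num_workers")
    shard = len(xs) // num_workers
    local_grads = [
        mse_gradient(w, xs[k * shard:(k + 1) * shard], ys[k * shard:(k + 1) * shard])
        for k in range(num_workers)
    ]
    # Element-wise mean of every worker's gradient (the "all-reduce" step).
    return [sum(g[j] for g in local_grads) / num_workers for j in range(len(w))]


if __name__ == "__main__":
    w = [0.5, -1.0]
    xs = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0], [2.0, 2.0]]
    ys = [1.0, 0.0, 2.0, -1.0]
    print(mse_gradient(w, xs, ys))               # [-0.5, -3.25]
    print(data_parallel_gradient(w, xs, ys, 2))  # [-0.5, -3.25]
```

Production frameworks follow the same pattern at scale; PyTorch's DistributedDataParallel, for example, averages gradients with NCCL collectives rather than a Python loop.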

These key features underscore the NVIDIA HGX H100’s capabilities in delivering superior computational performance and enhanced efficiency for AI and HPC applications.

How does NVIDIA InfiniBand Networking improve HGX performance?

- High Throughput: InfiniBand provides very high per-port bandwidth (400 Gb/s with the NDR generation), enabling rapid communication between nodes in a cluster.

- Low Latency: Delivers minimal latency, which is crucial for time-sensitive AI and HPC applications, ensuring faster processing and response times.

- Scalability: Provides robust support for scaling up large clusters with thousands of nodes, making it ideal for extensive and complex AI and HPC workloads.

- Quality of Service (QoS): Enables granular control over traffic prioritization, ensuring that critical tasks receive the necessary bandwidth and minimal latency.

- Offloading Capabilities: Offloads network transport functions, including RDMA (remote direct memory access), from the CPU, freeing computational resources and improving overall system performance.

- Reliability and Resilience: InfiniBand's architecture includes advanced error detection and correction mechanisms, enhancing system reliability and data integrity.

By leveraging these advantages, InfiniBand significantly enhances the efficiency of AI and HPC workloads, enabling faster computations, improved resource utilization, and greater scalability to meet the demands of modern data-intensive applications.
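A common back-of-the-envelope way to see why both latency and throughput matter is the alpha-beta (latency-bandwidth) model: sending a message costs a fixed startup latency plus its size divided by link bandwidth. The sketch below applies it to single transfers and to a ring all-reduce across nodes. The 400 Gb/s figure matches an NDR InfiniBand port; the 2 microsecond latency is an illustrative assumption, not a measured value, and the helper names are hypothetical.

```python
def transfer_time(message_bytes: float, latency_s: float,
                  bandwidth_bytes_per_s: float) -> float:
    """Alpha-beta model: fixed per-message latency plus serialization time."""
    return latency_s + message_bytes / bandwidth_bytes_per_s


def ring_allreduce_time(message_bytes: float, num_nodes: int,
                        latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Estimated ring all-reduce time: 2(N-1) steps, each moving message/N bytes."""
    if num_nodes < 2:
        return 0.0
    steps = 2 * (num_nodes - 1)
    return steps * transfer_time(message_bytes / num_nodes, latency_s,
                                 bandwidth_bytes_per_s)


if __name__ == "__main__":
    LATENCY_S = 2e-6       # illustrative assumption, not a measured value
    BANDWIDTH = 400e9 / 8  # 400 Gb/s expressed in bytes per second
    for size in (1e3, 1e6, 1e9):
        t = transfer_time(size, LATENCY_S, BANDWIDTH)
        print(f"{size:>14.0f} B: {t * 1e6:12.2f} us")
    t_ring = ring_allreduce_time(1e9, 8, LATENCY_S, BANDWIDTH)
    print(f"8-node ring all-reduce of 1 GB: {t_ring * 1e3:.2f} ms")
```

Under these assumptions, a 1 KB message costs about 2.02 microseconds, almost all of it latency, while a 1 GB message takes about 20 ms and is dominated by bandwidth. Low latency therefore matters most for the many small synchronization messages, and high throughput matters most for bulk gradient exchange.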

What are the key components of HGX Powered by A100 80GB?

What role do tensor core GPUs play?

Tensor Core GPUs are pivotal in accelerating AI and HPC workloads because their Tensor Cores execute matrix multiply-accumulate operations, which are fundamental to deep learning and neural network training, far faster than general-purpose GPU cores. They are designed for mixed-precision calculation: inputs are multiplied in a lower-precision format such as FP16 while results are accumulated in a higher-precision format such as FP32, which lets them handle large datasets and models efficiently. This results in substantial improvements in performance and power efficiency, making them an essential component of modern systems that need both speed and sufficient numerical accuracy.
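Why accumulate at higher precision? The sketch below emulates the numerics in plain Python, using the standard `struct` module to round values to IEEE 754 half precision (`"e"`) and single precision (`"f"`). It compares a dot product that keeps its running sum in FP16 against one that accumulates in FP32. This only illustrates the rounding behavior; it is not how Tensor Cores are programmed, and the function names are hypothetical.

```python
import struct
from typing import Sequence


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot_fp16_accumulate(a: Sequence[float], b: Sequence[float]) -> float:
    """FP16 inputs with an FP16 running sum."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_fp16(acc + to_fp16(x) * to_fp16(y))
    return acc


def dot_fp32_accumulate(a: Sequence[float], b: Sequence[float]) -> float:
    """FP16 inputs with an FP32 running sum (the mixed-precision pattern)."""
    acc = 0.0
    for x, y in zip(a, b):
        # The product of two FP16 values is exactly representable in FP32.
        acc = to_fp32(acc + to_fp16(x) * to_fp16(y))
    return acc


if __name__ == "__main__":
    ones = [1.0] * 4096
    print(dot_fp16_accumulate(ones, ones))  # 2048.0 (FP16 cannot represent 2049)
    print(dot_fp32_accumulate(ones, ones))  # 4096.0
```

Once the FP16 running sum reaches 2048, adding 1.0 rounds back to 2048, so the sum stalls; the FP32 accumulator keeps the exact result. Low-precision inputs supply the speed, and the wider accumulator preserves accuracy.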

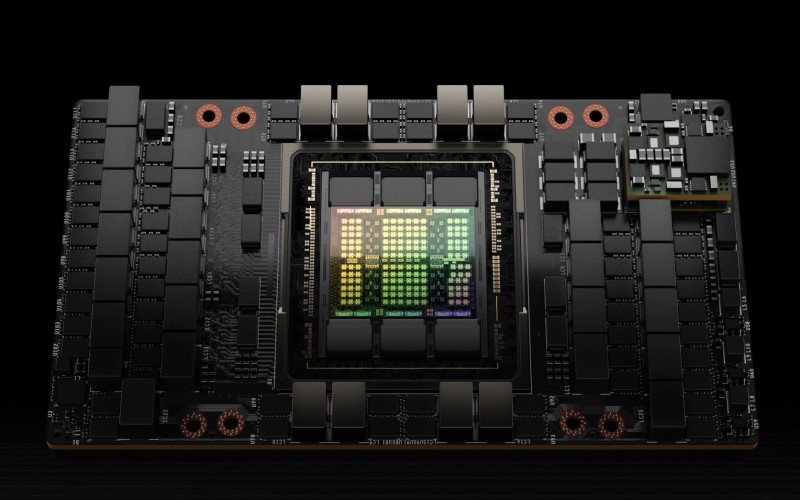

Why is HGX considered a new unit of computing?

HGX is considered a new unit of computing due to its ability to integrate multiple GPUs in a unified architecture, effectively creating a supercomputer within a single platform. This integration enables unprecedented levels of computational power and scalability, which are necessary for tackling the most complex AI and HPC challenges. HGX brings together innovative technologies such as NVLink and NVSwitch, providing a cohesive and efficient system that substantially surpasses traditional computing architectures in terms of performance and capability.

How do NVLink and NVSwitch enhance performance?

- High-Speed Interconnect: NVLink provides a high-bandwidth, low-latency interconnect between GPUs, ensuring that data can be transferred quickly and efficiently within the system.

- Scalability: NVLink and NVSwitch allow for seamless scaling of multiple GPUs, facilitating the construction of extensive GPU clusters that can handle large-scale AI and HPC workloads.

- Peer-to-Peer Communication: These technologies enable direct GPU-to-GPU communication without involving the CPU, reducing latency and increasing overall system throughput.

- Efficient Resource Utilization: By offloading data transfer tasks from traditional system buses, NVLink and NVSwitch free up resources, allowing for more efficient and effective use of computational capabilities.

- Enhanced Bandwidth: NVSwitch complements NVLink by acting as a switch fabric that connects the NVLinks of every GPU on an 8-GPU HGX baseboard, so any GPU can communicate with any other at full NVLink bandwidth.

By leveraging these technologies, HGX platforms deliver superior performance, enabling faster computations, greater computational density, and enhanced efficiency for AI and HPC applications.
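Collective libraries such as NCCL commonly implement all-reduce over NVLink and NVSwitch with ring or tree algorithms. The pure-Python simulation below shows the ring variant for summation. Each of N hypothetical GPUs splits its buffer into N chunks and runs N-1 reduce-scatter steps, passing one chunk to its right-hand neighbor and adding the chunk it receives. It then runs N-1 all-gather steps that circulate the fully reduced chunks. This is a teaching sketch of the algorithm, not NCCL's implementation; `ring_allreduce` is a hypothetical name.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a ring all-reduce (sum) across len(buffers) simulated GPUs."""
    if not buffers:
        return []
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all buffers must have the same length")
    # Chunk c covers indices bounds[c][0] .. bounds[c][1] - 1.
    bounds = [(c * length // n, (c + 1) * length // n) for c in range(n)]
    data = [list(b) for b in buffers]

    # Reduce-scatter: at step s, rank r sends chunk (r - s) mod n to rank r + 1.
    for step in range(n - 1):
        # Snapshot every send first so all ranks exchange "simultaneously".
        sends = []
        for rank in range(n):
            c = (rank - step) % n
            lo, hi = bounds[c]
            sends.append((c, data[rank][lo:hi]))
        for rank in range(n):
            c, payload = sends[(rank - 1) % n]  # receive from left neighbor
            lo, hi = bounds[c]
            for i, v in zip(range(lo, hi), payload):
                data[rank][i] += v
    # Rank r now holds the fully reduced chunk (r + 1) mod n.

    # All-gather: circulate the reduced chunks, overwriting stale copies.
    for step in range(n - 1):
        sends = []
        for rank in range(n):
            c = (rank + 1 - step) % n
            lo, hi = bounds[c]
            sends.append((c, data[rank][lo:hi]))
        for rank in range(n):
            c, payload = sends[(rank - 1) % n]
            lo, hi = bounds[c]
            data[rank][lo:hi] = payload
    return data


if __name__ == "__main__":
    gpus = [[1.0, 2.0, 3.0, 4.0, 5.0],
            [10.0, 20.0, 30.0, 40.0, 50.0],
            [100.0, 200.0, 300.0, 400.0, 500.0]]
    for row in ring_allreduce(gpus):
        print(row)  # [111.0, 222.0, 333.0, 444.0, 555.0] on every rank
```

Each GPU sends and receives about 2(N-1)/N of its buffer in total, so per-GPU traffic stays roughly constant as GPUs are added. This is why the ring pattern suits high-bandwidth links such as NVLink.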

What benefits do businesses gain from deploying NVIDIA HGX AI Supercomputing Platform?

How does NVIDIA HGX improve data center efficiency?

- Optimized Power Consumption: NVIDIA HGX platforms are designed to deliver high computational power while minimizing energy usage, leading to more sustainable and cost-effective data centers.

- Space Efficiency: By integrating multiple GPUs into a unified platform, HGX reduces the need for additional hardware, thus saving valuable rack space within the data center.

- Reduced Latency: The advanced interconnect technologies such as NVLink and NVSwitch minimize data transfer times, ensuring quick processing and reduced latency for data-intensive applications.

- Simplified Management: HGX platforms come with unified software stacks that streamline the deployment and management of AI and HPC workloads, reducing operational complexity and improving administrative efficiency.

- Scalability: HGX’s architecture allows for easy scaling, facilitating expansions and upgrades without significant infrastructure overhauls, ensuring the data center can grow with the increasing demands.

What are the use cases of HGX A100 in real-world applications?

- AI Training and Inference: HGX A100 is used extensively for training large-scale AI models and delivering rapid inference, significantly reducing the time required to develop and deploy AI solutions.

- High-Performance Computing (HPC): Suitable for scientific simulations, weather forecasting, and genomic research, the HGX A100 accelerates computations, allowing researchers to achieve results faster.

- Data Analytics: By accelerating data processing tasks, HGX A100 enables more rapid analysis of large datasets, helping businesses gain insights in near real time.

- Machine Learning Operations (MLOps): Facilitating the deployment and management of machine learning models, HGX A100 helps streamline MLOps pipelines, increasing productivity and efficiency.

- Virtual Desktop Infrastructure (VDI): HGX A100 supports high-performance virtual desktops, ensuring seamless performance for remote workers and enhancing the overall user experience.